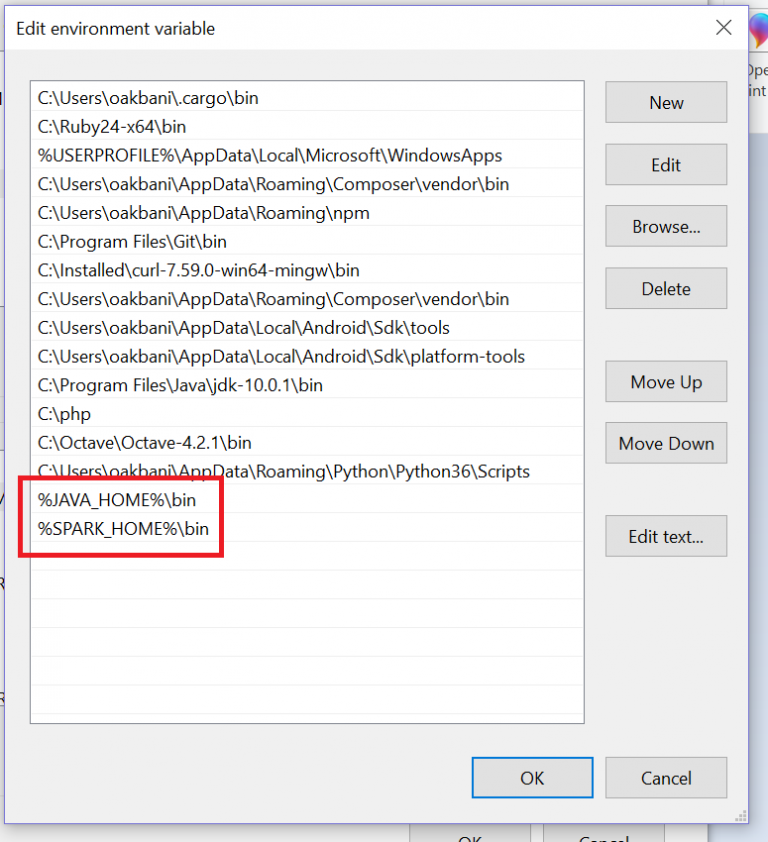

Hello, I am trying to install Apache Spark, but I get the error below. I have already installed a version of Java that is compatible with Apache Spark, Python, and Scala.

    Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
    To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
    22/05/18 22:50:07 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    22/05/18 22:50:09 ERROR SparkContext: Error initializing SparkContext.
        at 0(Native Method)
    Caused by: Exception: Illegal character in path at index 32: spark://DESKTOP-H3SRII2:63213/C:\classes
        at $Parser.fail(Unknown Source)
        at .ExecutorClassLoader.(ExecutorClassLoader.scala:57)
    22/05/18 22:50:09 ERROR Utils: Uncaught exception in thread shutdown-hook-0
        at .Executor.stop(Executor.scala:333)
    Caused by:
        at .ShuffleBlockPusher$.(ShuffleBlockPusher.scala:465)
        at .ShuffleBlockPusher$.(ShuffleBlockPusher.scala)
    22/05/18 22:50:09 WARN ShutdownHookManager: ShutdownHook '$anon$2' failed,
        at .report(Unknown Source)
        at .get(Unknown Source)
        at .ShutdownHookManager.executeShutdown(ShutdownHookManager.java:124)
        at .ShutdownHookManager$1.run(ShutdownHookManager.java:95)
    Caused by:
        at .ShuffleBlockPusher$.(ShuffleBlockPusher.

Installing Apache Spark and Python on Windows (keep scrolling for MacOS and Linux):

Install a JDK (Java Development Kit) from l. You must install the JDK into a path with no spaces, for example c:\jdk.
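As a small sanity check on the log above, the following Python sketch looks up which character sits at index 32 of the URI quoted in the error message, and tests whether an install path contains spaces, as the JDK instruction requires. The helper names `char_at` and `has_spaces` are hypothetical, and `C:\Program Files\Java` is only an illustrative path that does not appear in the original post. This only locates the offending character; it does not by itself explain why Spark built that URI.

```python
def char_at(text: str, index: int) -> str:
    """Return the character at the position reported in the error message."""
    return text[index]


def has_spaces(path: str) -> bool:
    """Return True if the path contains a space character."""
    return " " in path


# URI copied verbatim from the "Illegal character in path at index 32" line.
uri = r"spark://DESKTOP-H3SRII2:63213/C:\classes"

# Index 32 is the backslash of the Windows path appended to the spark:// URI.
print(repr(char_at(uri, 32)))

# The example install path from the instructions has no spaces.
print(has_spaces(r"c:\jdk"))

# Hypothetical path with a space, the kind the instructions say to avoid.
print(has_spaces(r"C:\Program Files\Java"))
```

The same `has_spaces` check could be pointed at whatever directories your own Java and Spark installations use.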
